AndBert

From AI Assistants to AI Agents: A Year of Rapid Evolution

It's been nearly twelve months since I last wrote about AI in coding, and frankly, the pace of change has been staggering. When I wrote "Rediscovering Coding with AI Assistants", I was exploring tools like GitHub Copilot and Claude AI as helpful companions in my return to hands-on development. Back then, these felt revolutionary enough. Today, that experience seems almost quaint.

A recent holiday week, spent largely housebound and punctuated by sleepless nights with a baby, led me back to my laptop and a deeper dive into the current AI landscape. What I discovered has fundamentally shifted my perspective on where we're heading - and it's happening faster than I anticipated.

The Leap from Assistant to Agent

The difference between AI assistants and AI agents is profound. Where assistants like Copilot suggest code snippets or help debug issues, agents operate with genuine autonomy. They don't just help you code; they can manage entire development workflows from start to finish.

My exploration centred around Claude Code, and the experience has been eye-opening. This isn't just about getting coding suggestions anymore - it's about defining workflows that an AI agent can execute independently, with proper oversight and quality gates.

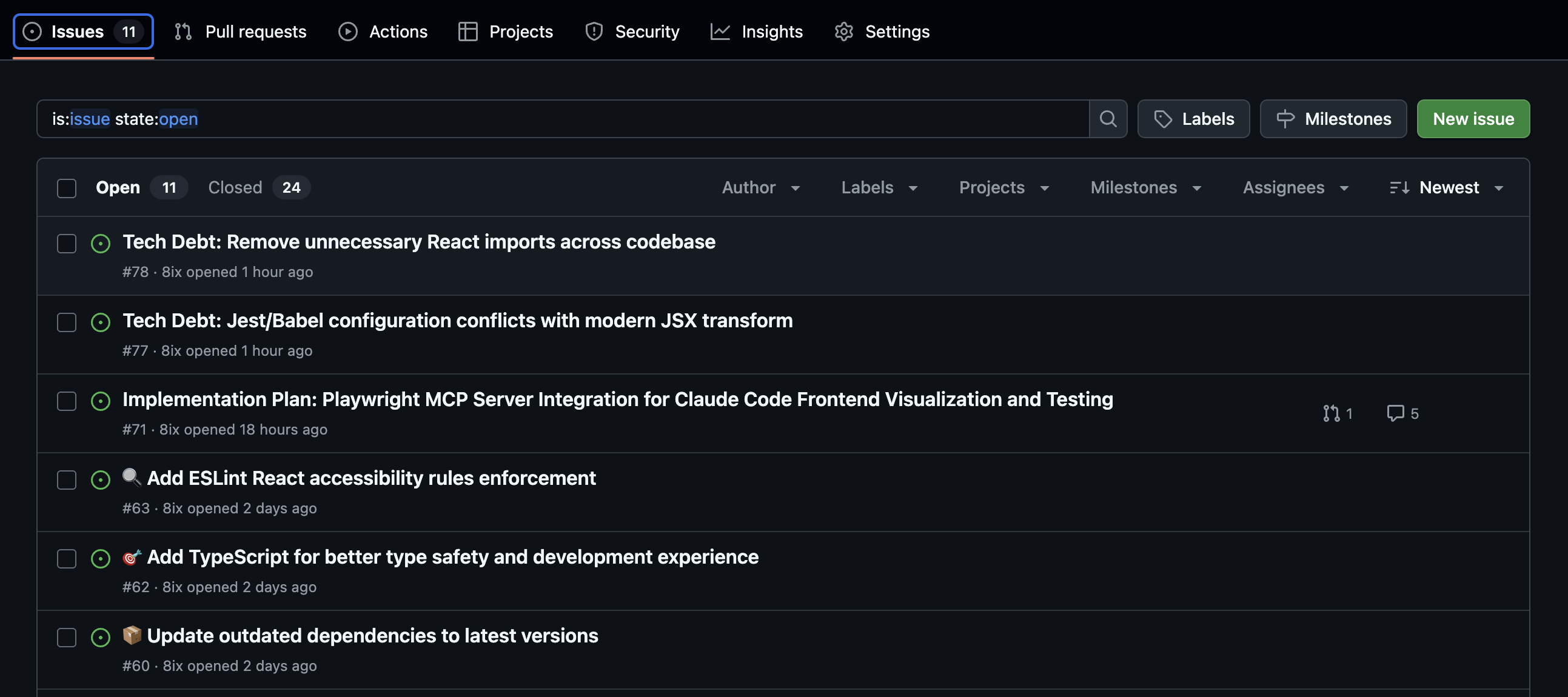

Figure 1: Claude Code managing its own GitHub Issues backlog, demonstrating autonomous task management

Building a Workflow That Actually Works

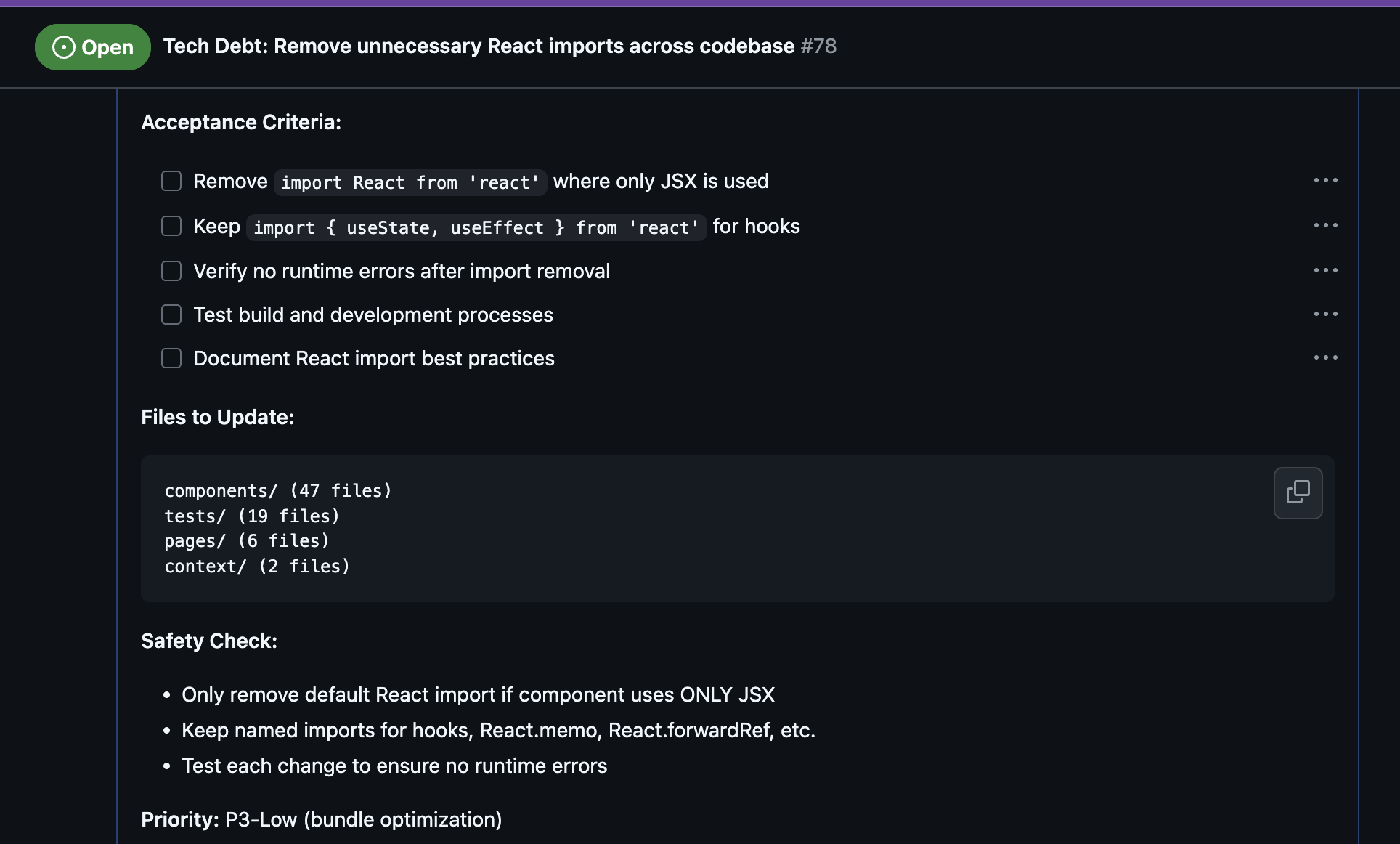

The real breakthrough came when I realised that AI agents thrive within well-defined boundaries. Rather than giving Claude Code free rein, I established a structured workflow using GitHub Issues as the foundation. The process is elegantly simple: I populate issues with detailed context and acceptance criteria, and the agent handles the rest.

Figure 2: Detailed acceptance criteria that Claude Code created and works to when tackling tasks

Figure 2: Detailed acceptance criteria that Claude Code created and works to when tackling tasks
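
To make "detailed acceptance criteria" a little more concrete, here is a minimal TypeScript sketch of how an agent, or a CI job, might check whether an issue's criteria have all been ticked off. It assumes the criteria are written as GitHub-style task-list items (`- [ ]` / `- [x]`) in the issue body; `parseAcceptanceCriteria` and `allCriteriaMet` are hypothetical names, not part of Claude Code or the GitHub API, and the example issue text is illustrative.

```typescript
// Minimal sketch: assumes acceptance criteria are GitHub-style task-list
// items ("- [ ] ..." or "- [x] ...") in the issue body. Names are hypothetical.

interface Criterion {
  text: string;
  done: boolean;
}

// Matches lines such as "- [ ] Add dark mode" or "* [x] Add tests".
const TASK_LINE = /^\s*[-*]\s+\[( |x|X)\]\s+(.+)$/;

function parseAcceptanceCriteria(issueBody: string): Criterion[] {
  const criteria: Criterion[] = [];
  for (const line of issueBody.split(/\r?\n/)) {
    const match = TASK_LINE.exec(line);
    if (match) {
      criteria.push({ text: match[2].trim(), done: match[1].toLowerCase() === "x" });
    }
  }
  return criteria;
}

// Only "done" when there is at least one criterion and every one is ticked.
function allCriteriaMet(criteria: Criterion[]): boolean {
  return criteria.length > 0 && criteria.every((c) => c.done);
}

// Example issue body (illustrative, not taken from a real backlog).
const issueBody = [
  "## Acceptance criteria",
  "- [x] Blog listing uses a horizontal scroll layout",
  "- [x] Layout works in light and dark mode",
  "- [ ] Screenshots captured for both themes",
].join("\n");

const criteria = parseAcceptanceCriteria(issueBody);
const outstanding = criteria.filter((c) => !c.done).map((c) => c.text);
console.log(outstanding); // the one unticked criterion
console.log(allCriteriaMet(criteria)); // false
```

Treating an empty checklist as "not met" is deliberate: an issue with no criteria gives the agent nothing to be held to.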

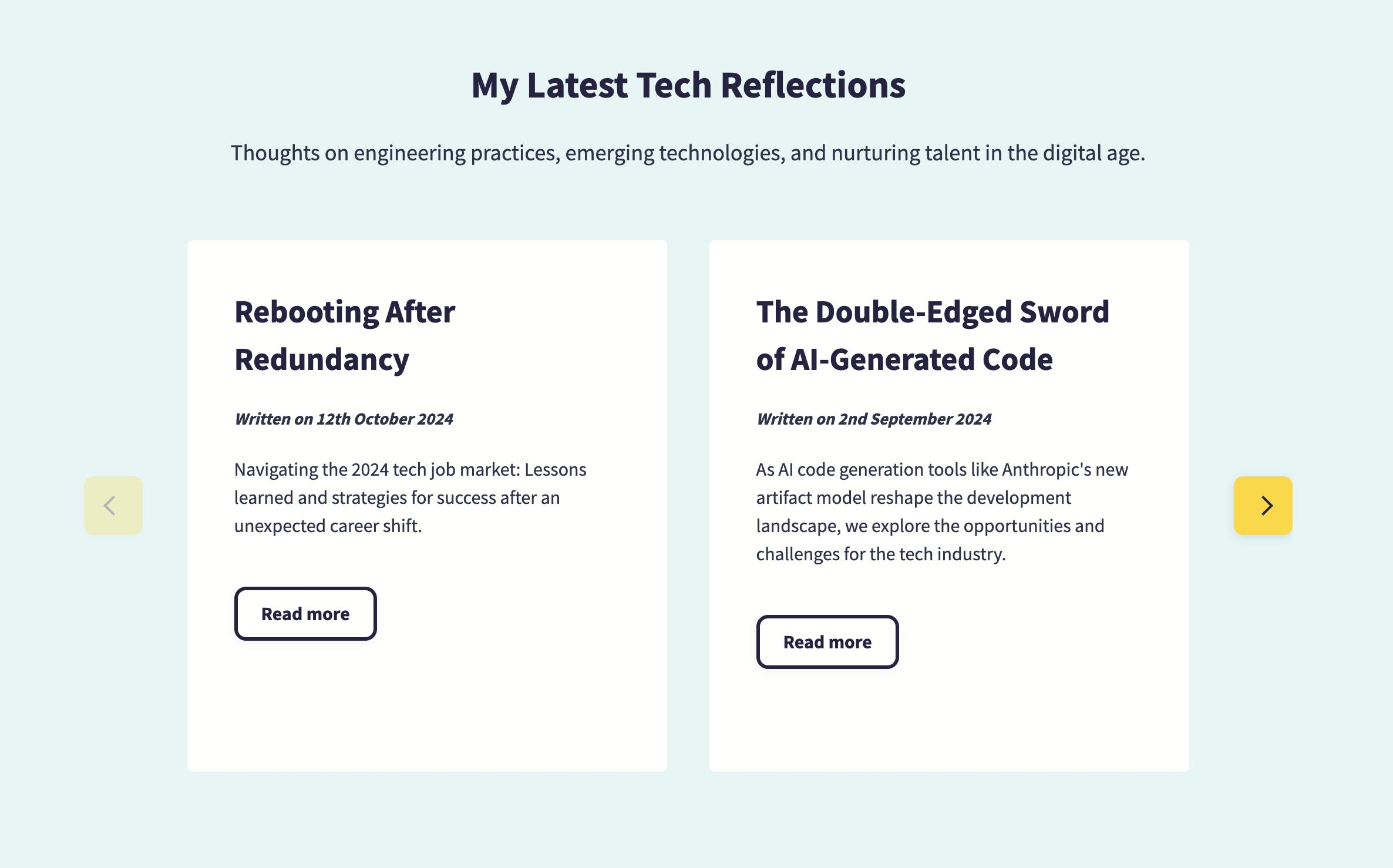

Here's what impressed me most: I asked Claude Code to redesign a lengthy blog listing area on my site. Not only did it condense the layout and implement a horizontal scroll solution, but because I'd integrated it with Playwright testing capabilities, it went further. It investigated the changes, toggled between light and dark modes, and even captured screenshots to verify the implementation worked correctly.

Figure 3: Claude Code's redesigned blog post carousel with horizontal scrolling and optimized layout

Figure 3: Claude Code's redesigned blog post carousel with horizontal scrolling and optimized layout
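
That verification step can be pictured as a small test matrix: every page the change touches, rendered in every colour scheme, with a screenshot saved for each combination. The TypeScript sketch below shows only that structure. The `capture` callback is hypothetical; in a real Playwright test it would wrap calls such as `page.emulateMedia({ colorScheme })` and `page.screenshot({ path })`, which are left out here so the example runs without a browser. The routes and the screenshot naming scheme are illustrative assumptions.

```typescript
// Sketch of a light/dark visual check matrix. `Capture` is a hypothetical
// callback; in a Playwright test it would call page.emulateMedia({ colorScheme })
// and page.screenshot({ path }) before making its assertions.

type ColorScheme = "light" | "dark";

interface VisualCheck {
  route: string;
  scheme: ColorScheme;
  screenshotPath: string;
}

// Returns true if the page rendered correctly for this check.
type Capture = (check: VisualCheck) => boolean;

// One check per (route, colour scheme) pair, so no combination is skipped.
function planVisualChecks(routes: string[], schemes: ColorScheme[]): VisualCheck[] {
  const checks: VisualCheck[] = [];
  for (const route of routes) {
    // "/" -> "home", "/blog/archive" -> "blog-archive"
    const slug = route.replace(/^\/+/, "").replace(/\//g, "-") || "home";
    for (const scheme of schemes) {
      checks.push({ route, scheme, screenshotPath: `screenshots/${slug}-${scheme}.png` });
    }
  }
  return checks;
}

// Runs every check (not fail-fast) and returns the failures for review.
function runVisualChecks(checks: VisualCheck[], capture: Capture): VisualCheck[] {
  return checks.filter((check) => !capture(check));
}

// Illustrative run with a fake capture that "fails" the blog page in dark mode.
const checks = planVisualChecks(["/", "/blog"], ["light", "dark"]);
const failures = runVisualChecks(checks, (c) => !(c.route === "/blog" && c.scheme === "dark"));
console.log(checks.map((c) => c.screenshotPath));
console.log(failures.map((c) => c.screenshotPath)); // only the dark-mode blog screenshot
```

Unlike a build pipeline, this loop runs every combination rather than stopping early, so a single run reports every theme and page that needs attention.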

The level of thoroughness was remarkable, but it highlighted a crucial point: this sophistication requires significant groundwork. You need the technical expertise to set up proper testing infrastructure, define quality gates, and create workflows that hold the AI accountable.
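
One simple way to think about a quality gate is as a fail-fast pipeline: each check runs in order, and the first failure stops the run and is reported back to the agent. The TypeScript sketch below is a minimal, self-contained version under stated assumptions. The gate names mirror the checks mentioned in this post (linting, type checking, build, tests), but each `run` function is a hypothetical stand-in for invoking the real tool, and the ordering is an assumption rather than a prescribed setup.

```typescript
// Minimal fail-fast quality gate. Each `run` is a hypothetical stand-in for
// invoking a real tool (ESLint, `tsc --noEmit`, the build, the test runner).

interface GateResult {
  ok: boolean;
  detail: string;
}

interface Gate {
  name: string;
  run: () => GateResult;
}

interface GateReport {
  passed: string[];
  failedAt?: { gate: string; detail: string };
}

// Runs gates in order and stops at the first failure, so slower gates
// (build, end-to-end tests) never run against code that fails cheap checks.
function runQualityGates(gates: Gate[]): GateReport {
  const passed: string[] = [];
  for (const gate of gates) {
    const result = gate.run();
    if (!result.ok) {
      return { passed, failedAt: { gate: gate.name, detail: result.detail } };
    }
    passed.push(gate.name);
  }
  return { passed };
}

// Illustrative gates: type checking fails, so build and tests are skipped.
const gates: Gate[] = [
  { name: "lint", run: () => ({ ok: true, detail: "no lint errors" }) },
  { name: "typecheck", run: () => ({ ok: false, detail: "1 type error" }) },
  { name: "build", run: () => ({ ok: true, detail: "build succeeded" }) },
  { name: "test", run: () => ({ ok: true, detail: "all tests passed" }) },
];

const report = runQualityGates(gates);
console.log(report.passed); // only "lint" passed before the failure
console.log(report.failedAt); // the type-check failure to hand back to the agent
```

Putting the cheapest checks first is a design choice: most mistakes surface in seconds, and the slower build and test steps only run once the basics pass.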

Autonomous Excellence in Action

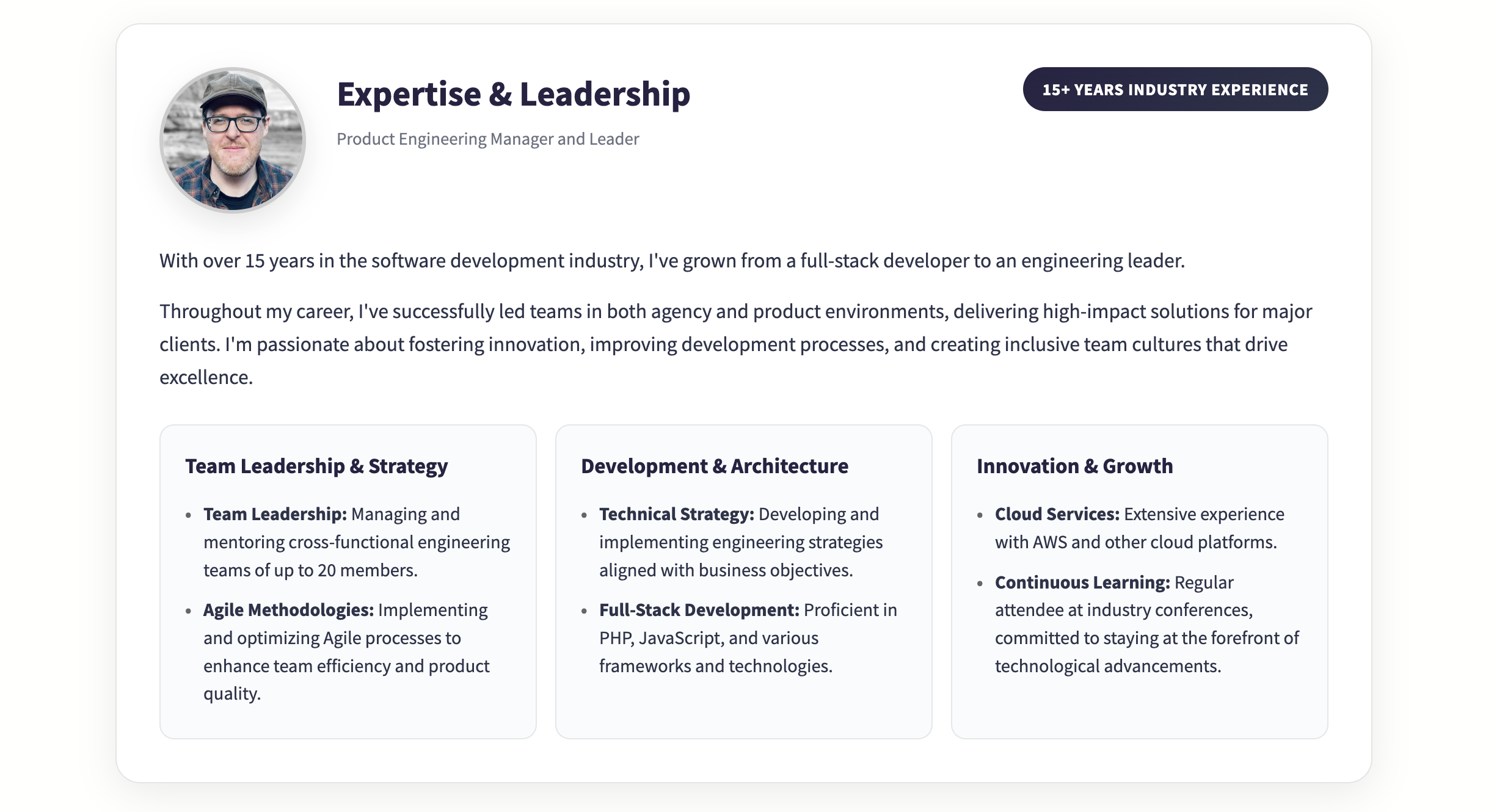

What sets this experience apart from my previous AI assistant encounters is the quality and completeness of the work. Claude Code didn't just implement features - it enhanced them beyond my original specifications.

Figure 4: The expertise and leadership section that Claude Code rebuilt and optimized with improved layout and visual hierarchy

Figure 4: The expertise and leadership section that Claude Code rebuilt and optimized with improved layout and visual hierarchy

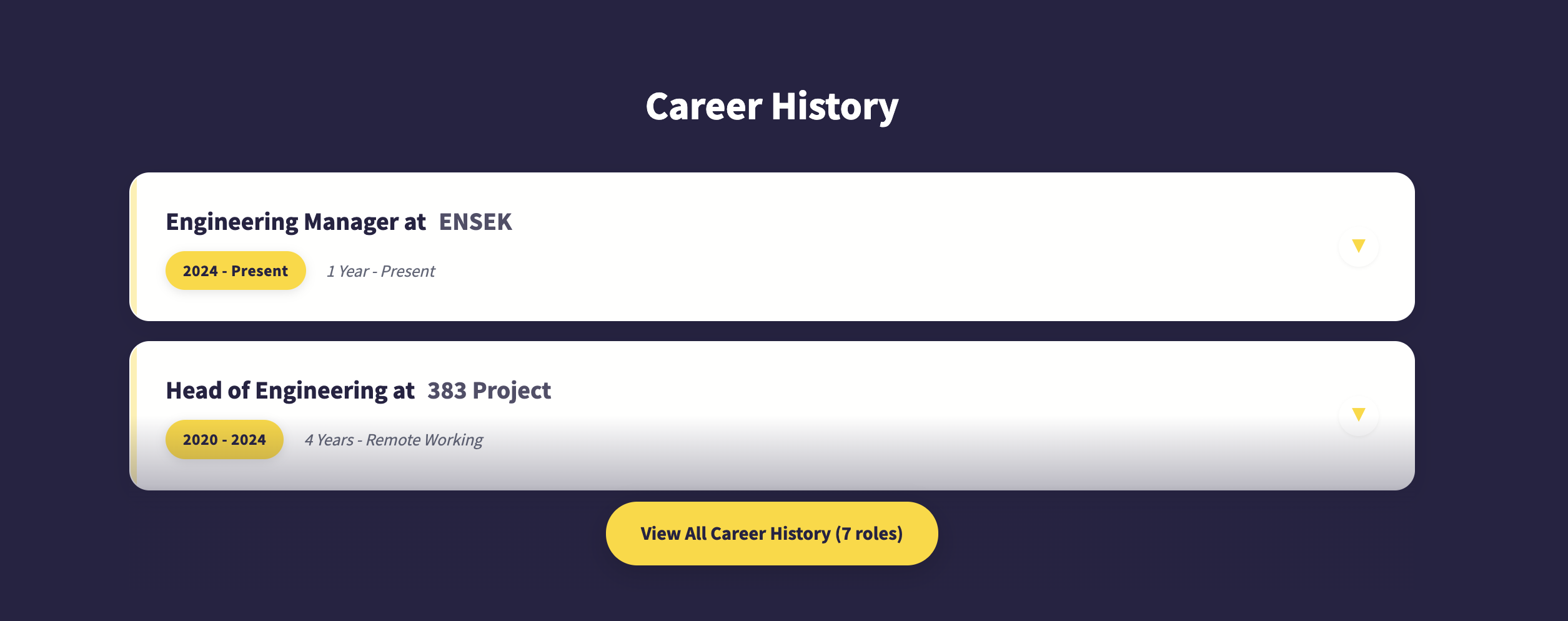

When working on my professional experience section, the agent not only improved the visual design but also made the career history interactive, adding thoughtful UI enhancements I hadn't even requested.

Figure 5: Interactive career history section with enhanced user experience features implemented autonomously

Figure 5: Interactive career history section with enhanced user experience features implemented autonomously

This level of proactive improvement represents a fundamental shift from AI assistants that wait for specific instructions to AI agents that understand context and optimise beyond the immediate request.

The Strategic Implications for Engineering Leadership

As an engineering leader, I've come away from this experience with a fresh view of how our field is evolving. We're moving towards a future where developers become more like project reviewers and quality gatekeepers, requesting functionality and critically evaluating AI-generated solutions.

This shift brings both opportunities and challenges. Senior engineers who understand testing frameworks, code quality standards, and architectural principles will likely see their productivity soar. They can set up the infrastructure that enables AI agents to work effectively while maintaining the expertise to spot potential issues.

However, I'm concerned about the implications for junior developers. The traditional learning curve - writing code, making mistakes, and gradually building expertise - becomes more complex when AI generates the initial implementation. Junior developers may struggle to distinguish between good and poor AI-generated code, potentially leading to a proliferation of substandard solutions in the wild.

The Human in the Middle Approach

Despite the impressive capabilities I've witnessed, I remain convinced that we need humans firmly in the middle of these processes, at least for the foreseeable future. The most successful implementations I've seen combine AI efficiency with human oversight and strategic thinking.

This isn't about being cautious for caution's sake. It's about recognising that while AI agents can handle complex tasks autonomously, they work best within frameworks designed by experienced developers who understand the broader context, quality requirements, and potential pitfalls.

The key is establishing what I call "intelligent constraints" - boundaries that guide the AI agent while still allowing for creative problem-solving. In my case, this meant:

- Well-defined acceptance criteria in GitHub Issues

- Comprehensive testing infrastructure with Playwright

- Quality gates including linting, type checking, and build verification

- Clear documentation standards and commit message conventions

- Automated workflows that enforce review processes
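
The last two constraints can be combined into a single merge rule that keeps a human in the middle: automated checks and acceptance criteria must pass, and at least one human must approve. The TypeScript sketch below assumes this information has already been gathered (for example from CI and the pull request's reviews). The field names and the `isBot` flag are hypothetical, and the review states are only loosely modelled on GitHub's `APPROVED`, `CHANGES_REQUESTED` and `COMMENTED`.

```typescript
// Minimal "human in the middle" merge rule. Assumes CI status, acceptance
// criteria and reviews were already collected; field names are hypothetical
// and the review states are loosely modelled on GitHub's.

type ReviewState = "APPROVED" | "CHANGES_REQUESTED" | "COMMENTED";

interface Review {
  author: string;
  isBot: boolean; // true for the AI agent or any other automation
  state: ReviewState;
}

interface PullRequestState {
  checksPassed: boolean; // lint, type check, build and tests all green
  criteriaMet: boolean; // every acceptance criterion ticked
  reviews: Review[]; // assumed to be in chronological order
}

interface MergeDecision {
  allowed: boolean;
  reasons: string[];
}

function canMerge(pr: PullRequestState): MergeDecision {
  const reasons: string[] = [];
  if (!pr.checksPassed) reasons.push("quality gates have not all passed");
  if (!pr.criteriaMet) reasons.push("acceptance criteria are not all met");

  // Keep each human reviewer's latest review; bot/agent reviews never count.
  const latest: Record<string, ReviewState> = {};
  for (const review of pr.reviews) {
    if (!review.isBot) latest[review.author] = review.state;
  }
  const states = Object.keys(latest).map((author) => latest[author]);

  if (!states.some((s) => s === "APPROVED")) reasons.push("no human approval");
  if (states.some((s) => s === "CHANGES_REQUESTED")) {
    reasons.push("a human reviewer has requested changes");
  }
  return { allowed: reasons.length === 0, reasons };
}

// Illustrative PR: the agent approved its own work, and a human approved
// after initially requesting changes.
const pr: PullRequestState = {
  checksPassed: true,
  criteriaMet: true,
  reviews: [
    { author: "agent", isBot: true, state: "APPROVED" },
    { author: "reviewer-a", isBot: false, state: "CHANGES_REQUESTED" },
    { author: "reviewer-a", isBot: false, state: "APPROVED" },
  ],
};
console.log(canMerge(pr)); // allowed, because the human's latest review is an approval
```

Ignoring agent approvals entirely is the key design choice here: the agent can do the work and even review it, but it can never be the one that lets its own work through.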

Looking Ahead: What This Means for Teams

The organisations that will thrive in this new landscape are those that invest in both the technology and the human expertise to use it effectively. This means:

For Engineering Leaders:

- Understanding these tools well enough to guide strategic adoption

- Ensuring teams have the testing and quality infrastructure that makes AI agents effective

- Developing new approaches to mentoring junior developers in an AI-augmented environment

For Senior Engineers:

- Embracing the role of AI workflow architect and quality gatekeeper

- Developing skills in prompt engineering and AI system design

- Maintaining and deepening expertise in areas where human judgment remains crucial

For Junior Engineers:

- Focusing even more intently on understanding fundamentals and code quality principles

- Learning to critically evaluate AI-generated solutions

- Developing the testing and debugging skills that make effective AI collaboration possible

The Infrastructure Investment

One crucial lesson from my Claude Code experience is that AI agents require significant infrastructure investment to be truly effective. This isn't just about having access to the AI tool - it's about building the supporting ecosystem that enables autonomous operation:

- Comprehensive Test Suites: My site's 205+ tests across 12 test suites provide the safety net that allows AI agents to work confidently

- Quality Automation: ESLint, Prettier, and TypeScript checking catch issues before they become problems

- Visual Testing Integration: Playwright integration allows the AI to verify its own work visually

- Clear Documentation: Well-maintained project documentation helps the AI understand context and conventions

- Structured Workflows: GitHub Issues with detailed acceptance criteria provide clear success metrics

Without this foundation, AI agents become less effective and potentially introduce more problems than they solve.

Final Thoughts

The evolution from AI assistants to autonomous agents represents more than just a technological upgrade - it's a fundamental shift in how we approach software development. The pace of change over the past twelve months has been remarkable, and I suspect we're only seeing the beginning.

As leaders, our role isn't to resist this change but to thoughtfully integrate these powerful tools while maintaining the human expertise and oversight that ensures quality outcomes. The future belongs to teams that can effectively blend AI efficiency with human wisdom, creating a collaborative approach that leverages the best of both.

The landscape is changing rapidly, but the core principles of good engineering leadership remain constant: foster learning, maintain quality standards, and always keep the bigger picture in mind. AI agents are powerful tools in service of these goals, not replacements for the strategic thinking that drives successful engineering teams.

What excites me most about this evolution is not just the productivity gains, but the potential for AI agents to handle routine tasks while freeing human developers to focus on the creative, strategic, and complex problem-solving work that truly drives innovation. The key is ensuring we build the right frameworks to harness this potential effectively.
