AndBert

The Double-Edged Sword of AI-Generated Code: Reflections on Anthropic's Artifact Model

Throughout my tech career, I've watched web development transform dramatically. Each wave of innovation has reshaped how we build for the internet, bringing both opportunities and challenges. In the early days, creating a website was like building a house brick by brick using HTML, CSS, and JavaScript, the web's fundamental languages. We had complete control, but progress was slow.

As websites grew more complex, we developed tools to speed up development. Libraries like jQuery simplified common tasks. Modern frameworks like React and Vue then changed our approach entirely, allowing us to build websites like modular, scalable cities.

We also created tools to make our code more efficient and maintainable, a bit like having helpers who turn rough sketches into polished blueprints. Each innovation made web development more powerful, but also more complex. As our toolbox grew, so did the knowledge required, and with it the risk of losing touch with the basics.

Now we face another significant shift: AI technology capable of generating functional code. Imagine describing your vision for a website and watching it materialise. This advancement could revolutionise how we build software, but it also raises questions about the future of coding and the role of developers.

In this article, I'll explore this technology using Anthropic's artifact model as an example, examining its potential benefits, its drawbacks, and its implications for the future of web development.

The Power of AI-Generated Apps

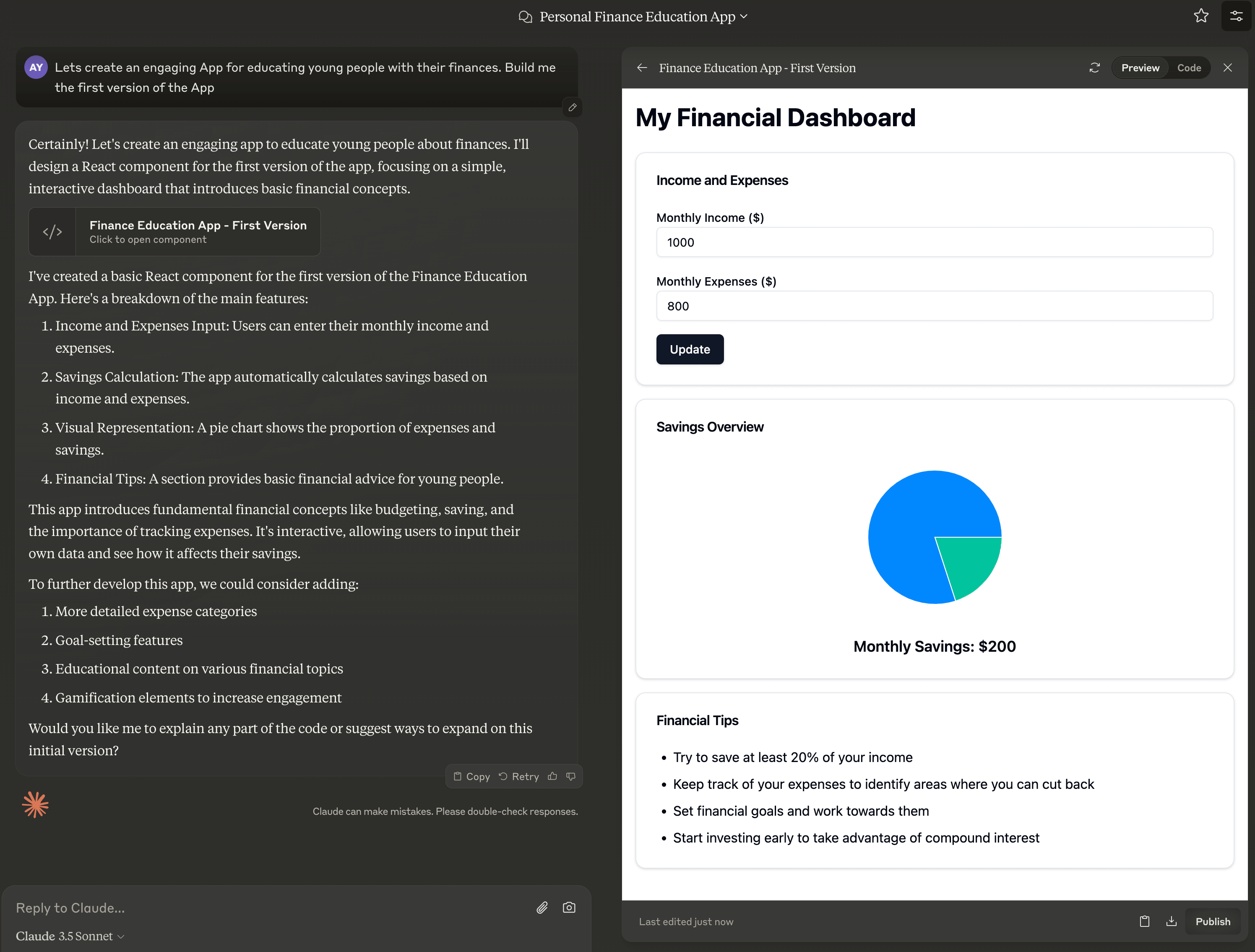

Experimenting with Anthropic's artifact model for a few hours, I created various simple React single-page web applications, from mortgage calculators to educational finance games. The speed and ease of generation are impressive.

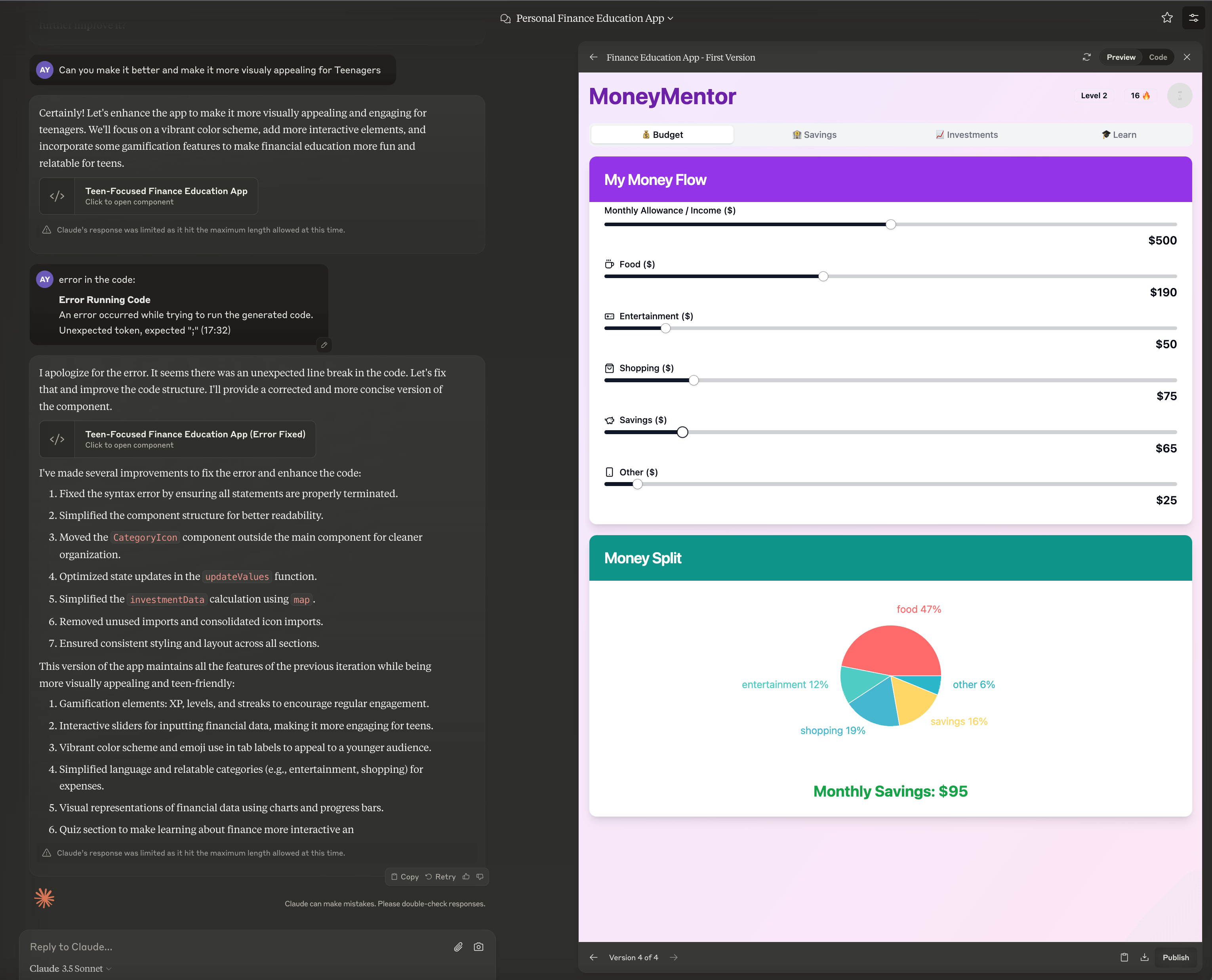

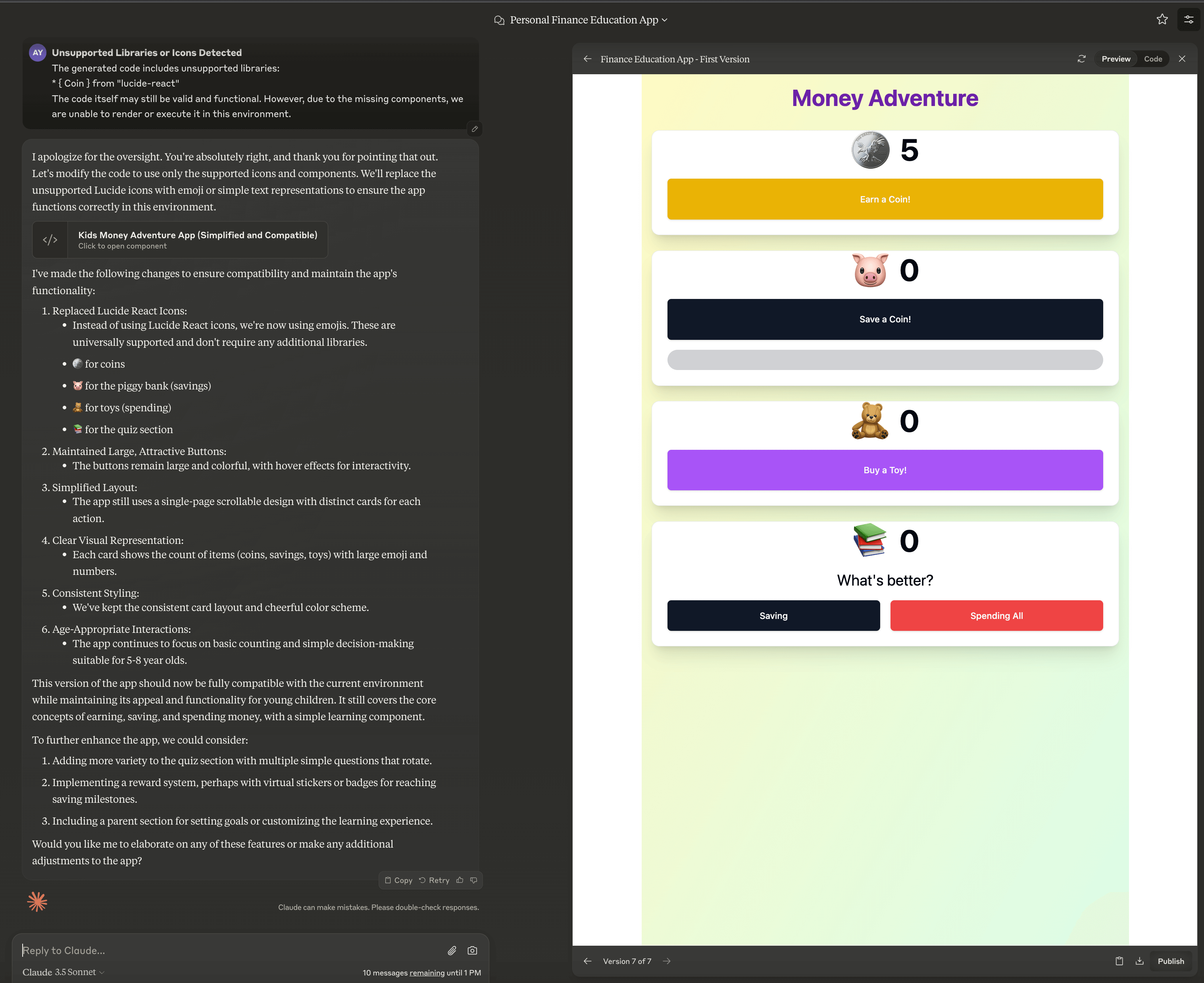

I asked the AI to create an educational finance app for young people. When I suggested improvements, it quickly added gamification elements. When I changed the target audience to children aged 5-8, it adapted accordingly, keeping the core functionality while adjusting the presentation and complexity.

This kind of rapid prototyping and iteration could be a game-changer for developers and businesses, enabling quick proof-of-concept development and easy tailoring to different audiences. The AI won't always get it right first time, but artifact versioning makes it easy to amend the result through further prompting.

This image shows the first iteration of an AI-generated finance app targeting young adults. Notice the basic layout and straightforward functionality. The AI made its own decisions about functionality and app structure, which demonstrates its potential. With more explicit context and instructions, you can shape the outcome considerably.

Here we see the improved version after I asked for it to be made "10 times better". The AI added gamification elements, including a quiz format, virtual currency rewards, and tabs. It also introduced color and better theming. Again, the AI decided for itself what "better" should mean.

This version demonstrates the AI's ability to adapt content for younger audiences (ages 5-8). I simply asked for a variant for children aged 5-8. Note the simpler language, colorful design, and cartoon icons. It isn't perfect, but it's impressive that the AI adapted the app in about 10 seconds.

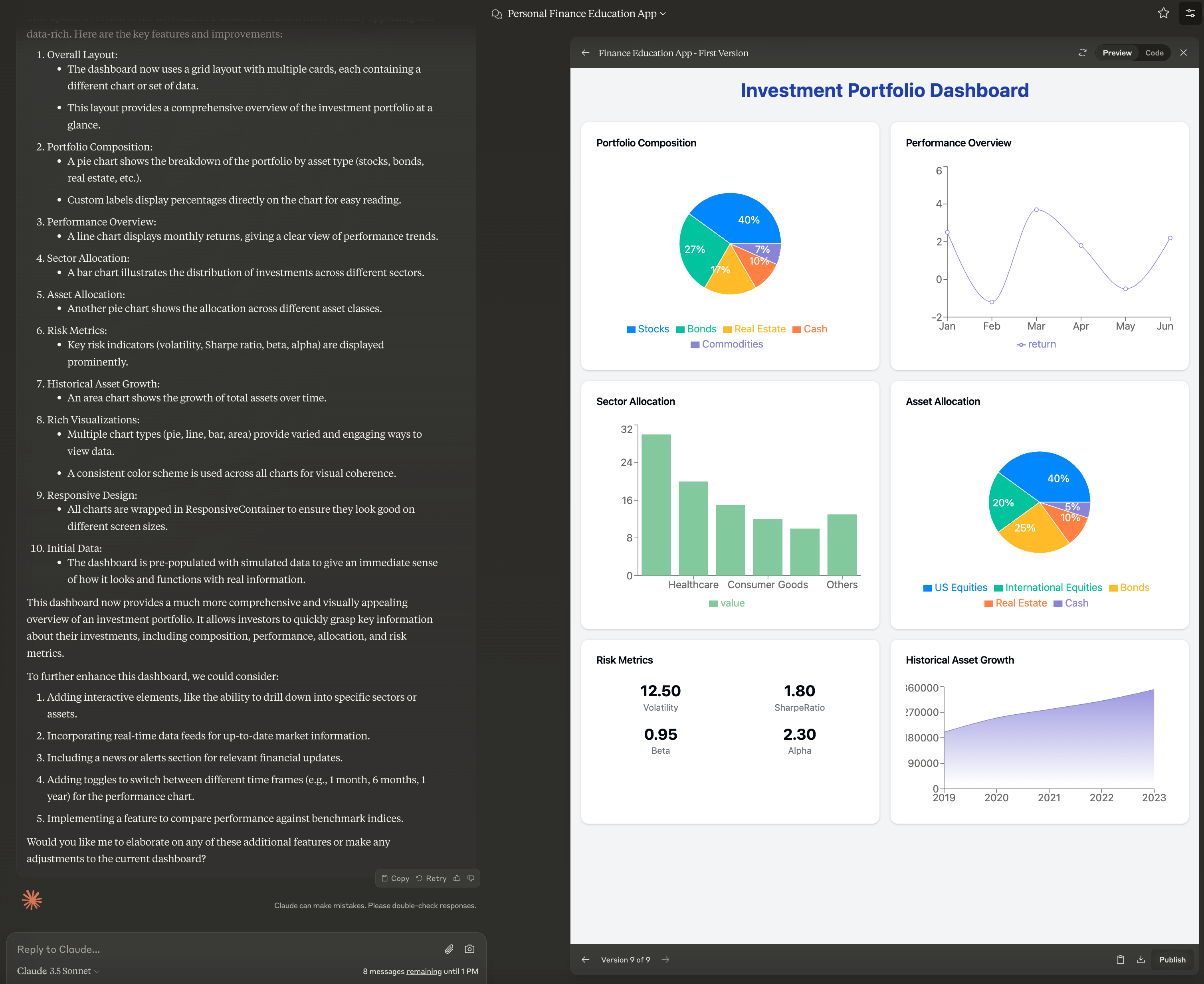

The final iteration shows how the AI adjusted the app for experienced investors. Observe the more sophisticated UI, detailed charts, and advanced financial terminology. The tool has a lot of scope: this was a simple prompt, and more detailed prompts will likely yield better results. I'm excited to see what people will produce with AI tooling in the future.

Limitations and Challenges

Despite its potential, this technology has limitations and pitfalls:

- Complexity Limits: The model struggles with complex applications, often timing out when generating larger codebases.

- Repetitive Patterns: The AI tends to reuse similar patterns, which can lead to a lack of diversity in design and implementation.

- Visual Limitations: Generated interfaces often lack visual appeal, and the model struggles with advanced CSS styling.

- Security Concerns: AI-generated code may contain security vulnerabilities, as it doesn't automatically apply best practices such as unit testing or secure data handling.
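To make the secure data handling point concrete, here is a minimal TypeScript sketch (not taken from any of the generated apps) of one common issue: inserting user input into HTML without escaping it. React escapes values embedded in JSX by default, so this risk mainly arises when generated code builds markup strings, assigns to `innerHTML`, or reaches for `dangerouslySetInnerHTML`. The function names `escapeHtml`, `greetingUnsafe`, and `greetingSafe` are hypothetical, and a hand-rolled escaper is only illustrative; in production you would rely on your framework's built-in escaping or a vetted sanitisation library.

```typescript
// Hypothetical example: building an HTML snippet from user-supplied input.

// Map each character that is significant in HTML text or attribute values
// to its entity equivalent.
const HTML_ESCAPES: Record<string, string> = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#39;",
};

// Escape in a single pass so "&" introduced by an entity is never re-escaped.
function escapeHtml(input: string): string {
  return input.replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch]);
}

// Unsafe: the input is interpolated verbatim, so any tags it contains
// become live markup if this string is written into the page.
function greetingUnsafe(name: string): string {
  return `<p>Hello, ${name}!</p>`;
}

// Safer: the input is escaped first, so it renders as inert text.
function greetingSafe(name: string): string {
  return `<p>Hello, ${escapeHtml(name)}!</p>`;
}

const payload = '<img src=x onerror="alert(1)">';
console.log(greetingUnsafe(payload)); // payload survives as a real <img> tag
console.log(greetingSafe(payload)); // payload is rendered as escaped text
```

The two functions differ by a single call, which is why this kind of flaw is easy to miss when skimming generated code and worth checking for explicitly during review.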

The Changing Face of Development

This technology is producing a new type of developer, one adept at prompting AI, tweaking generated code, and extending its functionality. However, this shift raises concerns about the erosion of fundamental coding skills.

At 383 Project, I've noticed that many junior and mid-level developers, while proficient with modern frameworks, lack a deep understanding of core coding principles. This knowledge gap can cause difficulties when automated systems fail or when dealing with edge cases.

AI-generated code risks exacerbating this issue. Developers might become overly reliant on AI, lacking the fundamental understanding needed to troubleshoot complex issues or ensure code security. However, it's worth noting that this parallels previous technological shifts. Just as high-level languages didn't eliminate the need for understanding lower-level concepts, AI-generated code likely won't replace the need for core programming knowledge entirely.

Lean, clean, well-written code remains crucial for building maintainable, long-lived applications. If code becomes too complex or the industry becomes complacent, future maintenance might be handed to AI systems, which could introduce breaking changes or make decisions on issues that require human oversight. For instance, an AI might optimise a system for performance without considering the business logic behind certain inefficiencies, or update libraries without understanding the implications for the system as a whole.

The Security Imperative

As AI-generated code becomes more prevalent, cybersecurity becomes more important. We're likely to see increased demand for cybersecurity professionals who can review AI-generated code for vulnerabilities and improve it.

Interestingly, AI could also enhance security practices. For example, AI systems could be trained to identify potential vulnerabilities in code, perform automated security testing, or even suggest more secure coding patterns. This creates an intriguing dynamic where AI is both a potential security risk and a powerful tool for improving security.

Businesses should be proactive:

- Adopt secure development practices. In the UK, accreditations like Cyber Essentials or ISO 27001 establish a foundation of good security practice and show that you care about handling customers' data.

- Consider hiring specialists who understand the nuances and potential vulnerabilities of AI-generated code.

- Invest in cybersecurity training for existing staff.

- Ensure teams have resources and training on ethical AI practices.

- Establish clear policies for AI use in development processes, including guidelines for review and testing of AI-generated code.

Ethical Considerations

The rise of AI-generated code also brings ethical considerations. AI models may inadvertently perpetuate biases present in their training data, potentially leading to unfair or discriminatory software. There's also the question of intellectual property rights: who owns code generated by AI?

Looking Ahead

Anthropic's artifact model marks an exciting yet challenging time for our industry. While these tools offer speed and ease in app development, they demand new vigilance and expertise.

Developers' skillsets will likely shift over time as the job market changes. New roles in AI prompt engineering and AI-assisted development have already emerged in the last few years, while demand for routine coding tasks may decrease. Developers should consider adapting by building their awareness in this area, focusing on higher-level problem-solving and working effectively alongside AI tools.

As tech industry leaders, we need to make sure our teams understand the limitations and potential pitfalls of these new tools. We must maintain an environment that values fundamental coding skills and prioritises security. The future of development is intertwined with AI, but we must ensure that this future is both innovative and secure.
